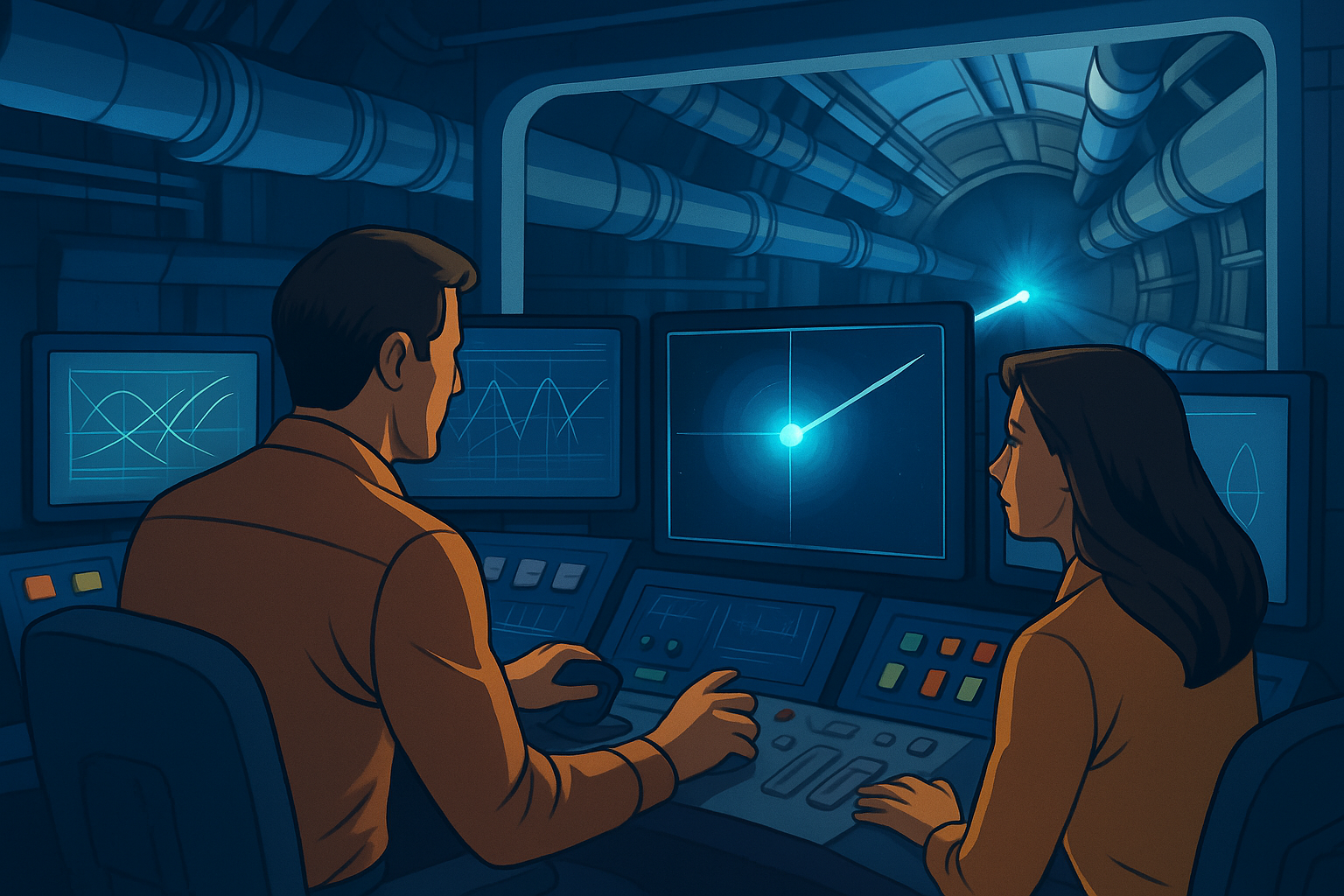

AI for Autonomous Particle Accelerators

Particle accelerators are of great benefit to society, both in basic research and in addressing current, practical challenges. They are highly complex, large-scale facilities operated by experts with many years of experience. Researchers are currently investigating how AI can support accelerator operation, with the aim of achieving greater precision and reliability. This would increase the performance of accelerator facilities and enable new scientific discoveries.